- Nvidia CEO Jensen Huang is hesitant to discuss Artificial General Intelligence (AGI) due to repeated misinterpretations of his views.

- Concerns persist that AGI could surpass human intelligence and act in ways that conflict with human values.

- Huang argues that timelines for AGI are meaningless without a clear definition of what AGI actually is. He suggests that defining AGI through specific benchmark tests would make it possible to predict when it might be achieved.

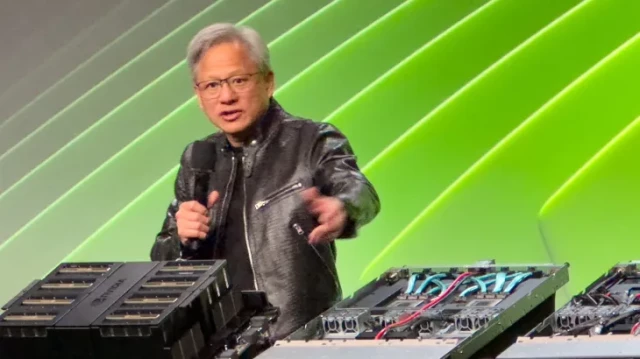

At Nvidia's annual GTC developer conference this week, CEO Jensen Huang seemed a touch weary of discussing Artificial General Intelligence (AGI).

He blames repeated misquotes for his wariness, but the topic itself is a hotbed of speculation and concern.

AGI, also referred to as "strong AI" or "human-level AI," promises a future where machines can handle a vast array of cognitive tasks as well as, or better than, humans. Unlike narrow AI, which excels at specific tasks such as image recognition or writing website code, AGI would be able to apply its abilities across many domains, which is why it is seen as a potential game-changer.

However, the very potential of AGI sparks existential questions. If machines can surpass human intelligence, how will this impact humanity? What if their decision-making processes don't align with our values? Science fiction has explored these anxieties for decades, painting dystopian scenarios where super-intelligent machines become uncontrollable.

For some in the media, these anxieties translate into click-bait headlines demanding timelines for AGI's arrival. When pressed on this topic, Huang points out the absurdity of such questions and illustrates his point with analogies. We know exactly when the New Year arrives because we all agree on how to measure time. Similarly, you know you've reached your destination, such as the GTC venue, when you see the giant banners marking it. In both cases, everyone agrees on what counts as arriving.

"The key is having a clear definition of what we're aiming for," says Huang. He proposes that if we could define AGI very specifically, perhaps by creating benchmark tests, then a timeline might be possible. For example, if AGI meant scoring slightly better than most humans on a specific exam, like the bar exam or a pre-med test, then achieving that within 5 years might be feasible. However, without a clear definition, such predictions are meaningless.

Huang also addressed concerns about "AI hallucination," where AI systems fabricate seemingly plausible but factually incorrect answers. His solution is refreshingly straightforward: ensure answers are well supported by research. Essentially, it's akin to basic media literacy: scrutinising the source and context, checking claims against established facts, and discarding unreliable sources entirely. "The AI shouldn't just produce answers," argues Huang, "it should research first to determine the best possible response." For critical topics like healthcare advice, consulting multiple credible sources is essential. This may also mean the AI needs the ability to admit it lacks knowledge or can't reach a definitive conclusion. In some cases, a simple "I don't know" or "The answer is uncertain" might be the most responsible response.